I’ve railed for years against the use of PII (Personally Identifiable Information) as unhelpful in the privacy sphere. This term is used in some US legislation and has unfortunately made its way into the vernacular of the cyber-security industry and privacy professionals. Use of the term PII is necessarily limiting and does not allow organizations to see the breadth of privacy issues that may accompany non-identifying personal data. This post is meant to shed light on the nuances in different types of data. While I’ll reference definitions found in the GDPR, this post is not meant to be legislation specific.

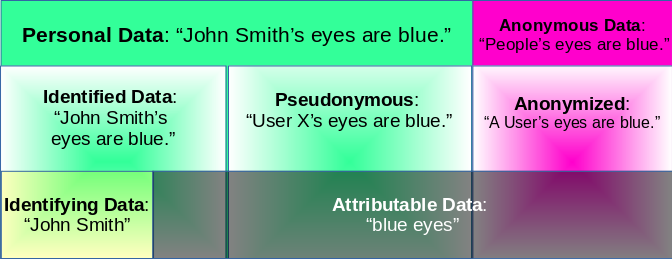

Personal Data versus Non-personal Data

Under the GDPR, Personal Data “means any information relating to an identified or identifiable natural person (‘data subject’); an identifiable natural person is one who can be identified, directly or indirectly, in particular by reference to an identifier such as a name, an identification number, location data, an online identifier or to one or more factors specific to the physical, physiological, genetic, mental, economic, cultural or social identity of that natural person.” The key term in the definition is the phrase “relating to.” This broadly refers to any data or information that has anything to do with a particular person, regardless of whether that data helps identify the person or whether that person is known. This contrasts with non-personal data, which has no relationship to an individual.

Personal Data: “John Smith’s eyes are blue.”

In this phrase, there are three pieces of personal data. The first is the name John which is a first name related to an individual, John Smith. The second is his last name. Finally, the third is blue eyes, which also relates to John Smith.

Anonymous Data: “People’s eyes are blue.”

No personal data is indicated in the above sentence as the data doesn’t relate to an individual, identified or identifiable. It relates to people in general.

Identified Data versus Pseudonymous Data

Much consternation has been exhibited over the concept of pseudonymized data. Under the GDPR, pseudonymisation “means the processing of personal data in such a manner that the personal data can no longer be attributed to a specific data subject without the use of additional information, provided that such additional information is kept separately and is subject to technical and organisational measures to ensure that the personal data are not attributed to an identified or identifiable natural person.” The key phrase in this definition is that the data can no longer be attributed to an individual without additional information. Let me break this down.

Identified Data: “John Smith’s eyes are blue.”

The same phrase we used in our example for Personal Data is identified because the individual, John Smith, is clearly identified in the statement.

Pseudonymous (Identifiable) Data: “User X’s eyes are blue.”

Here we have processed the individual’s name and replaced it with User X. In other words, it’s been pseudonymized. However, it is still identifiable. From the definition above, Personal Data is data relating to an identified or identifiable individual. Blue eyes are still related to an identifiable individual, User X (aka John Smith). We just don’t know who he is at the moment. Potentially we can combine this with information that links User X to John Smith. Where some people struggle is in understanding that there must be some form of separation between the use of the User X pseudonym and User X’s underlying identity. Store both in one table without any access controls and you’ve essentially pierced the veil of pseudonymity. WARNING: Here is where it can get tricky. Blue eyes are potentially identifying. If John Smith is the only user with blue eyes, it becomes much easier to identify User X as John Smith. This is a huge pitfall, as most attributable data is potentially re-identifying when combined with some other data.
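To make the separation concrete, here is a minimal Python sketch of pseudonymization. It is an illustration under stated assumptions, not a compliance recipe: the `pseudonymize` function, the `user-` prefix, and the field names are all hypothetical. The point is only that the pseudonymous rows and the lookup table linking pseudonyms to identities come out as two separate objects, so they can be stored apart and protected differently.

```python
import secrets

def pseudonymize(records, id_field="name"):
    """Split records into pseudonymous rows and a separate lookup table.

    Hypothetical helper for illustration only.
    """
    lookup = {}    # pseudonym -> real identity; keep apart, under access control
    assigned = {}  # real identity -> pseudonym, so repeat records stay linked
    rows = []
    for record in records:
        identity = record[id_field]
        if identity not in assigned:
            pseudonym = "user-" + secrets.token_hex(4)
            while pseudonym in lookup:  # guard against an unlikely collision
                pseudonym = "user-" + secrets.token_hex(4)
            assigned[identity] = pseudonym
            lookup[pseudonym] = identity
        # Copy every attribute except the identifying field.
        row = {k: v for k, v in record.items() if k != id_field}
        row["pseudonym"] = assigned[identity]
        rows.append(row)
    return rows, lookup

# Made-up example records.
records = [
    {"name": "John Smith", "eye_color": "blue"},
    {"name": "Jane Doe", "eye_color": "brown"},
    {"name": "John Smith", "eye_color": "blue"},
]
rows, lookup = pseudonymize(records)
```

The `rows` list still carries `eye_color`, so the warning above applies in full: if only one person in the data has blue eyes, the pseudonym alone does not protect him.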

Identifying Data versus Attributable Data

In looking at the phrase “John Smith’s eyes are blue” we can distinguish between identifying data and attributable data.

Identifying Data: “John Smith”

Without going into the debate over the number of John Smiths in the world, we can consider a person’s name as fairly identifying. While “John Smith” isn’t necessarily uniquely identifying, a name is a type of data that can be uniquely identifying.

Attributable Data: “blue eyes”

Blue eyes is an attribute. It can be attributable to a person, as in our phrase “John Smith’s eyes are blue.” It can be attributable to a pseudonym: “User X’s eyes are blue.” As we’ll see below, it can also be attributed anonymously.

Anonymous Data versus Anonymized Data

GDPR doesn’t define anonymous data, but in Recital 26 it says “anonymous information, namely information which does not relate to an identified or identifiable natural person or to personal data rendered anonymous in such a manner that the data subject is not or no longer identifiable.” In the first example, I distinguished Personal Data from Anonymous Data, which didn’t relate to a specific individual. Now we need to consider the scenario where we have clearly Personal Data which we anonymize (or render anonymous in such a manner that the data subject is not or no longer identifiable).

Anonymous Data: “People’s eyes are blue.”

For this statement, we were never talking about a specific individual; we’re making a generalized statement about people and an attribute shared by people.

Anonymized Data: “User’s eyes are blue.”

For this statement, we took Identified Data (“John Smith’s eyes are blue”) and processed it in a way that is potentially anonymous. We’ve now returned to the conundrum presented with Pseudonymous data. Specifically, if John Smith is the only user with blue eyes, then this is NOT anonymous. Even if John Smith is one of a handful of users with blue eyes, the degree of anonymity is fairly low. This is the concept of k-anonymity, whereby a particular individual is indistinguishable from k-1 other individuals in the data set. However, even this may not give sufficient anonymization guarantees. Consider a medical dataset of names, ethnicities and heart conditions. A hospital releases an anonymized list of heart conditions (3 people with heart failure, 2 without). Someone with outside knowledge (the names of the patients, and that those of Japanese descent rarely have heart failure) could make a fairly accurate guess as to which patients had heart failure and which did not. This revelation brought about the concept of l-diversity in anonymized data. The point here is that unlike Anonymous Data, which never related to a specific individual, Anonymized Data (and Pseudonymized Data) should be carefully examined for potential re-identification. Anonymizing data is a potential minefield.
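A rough Python sketch can show how k-anonymity and l-diversity differ. Everything here is an assumption made for illustration: the function names, the field names, and the five-row toy dataset (with placeholder groups "A" and "B") are invented, and `l_diversity` implements only the simplest "distinct values" reading of the idea. Rows are grouped by their quasi-identifiers, meaning the attributes an outsider might already know.

```python
from collections import Counter, defaultdict

def k_anonymity(rows, quasi_identifiers):
    """Size of the smallest group of rows sharing the same quasi-identifier values."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values())

def l_diversity(rows, quasi_identifiers, sensitive):
    """Smallest number of distinct sensitive values found within any group."""
    values = defaultdict(set)
    for row in rows:
        key = tuple(row[q] for q in quasi_identifiers)
        values[key].add(row[sensitive])
    return min(len(v) for v in values.values())

# Made-up data: 3 people with heart failure, 2 without.
patients = [
    {"ethnicity": "A", "heart_failure": True},
    {"ethnicity": "A", "heart_failure": True},
    {"ethnicity": "A", "heart_failure": True},
    {"ethnicity": "B", "heart_failure": False},
    {"ethnicity": "B", "heart_failure": False},
]

k = k_anonymity(patients, ["ethnicity"])                   # 2
l = l_diversity(patients, ["ethnicity"], "heart_failure")  # 1
```

In this toy data every patient hides among at least one other person (k is 2), yet each group contains only a single heart-condition value (l is 1). Anyone who knows which group a patient belongs to learns that patient's condition without ever singling out a row.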

If you need help navigating this minefield, please feel free to reach out to me at Enterprivacy Consulting Group.