I’m giving a speech to the Temple Terrace Rotary Club this coming week, and I’m sure they are expecting something fairly dry about the need to protect your privacy online, secure your passwords and such. However, I’m going to give them a somewhat different perspective. I don’t usually write out my speeches, preferring to speak from an outline so I make sure I touch on my major points, so what follows is an essay based on my outline.

A few years ago, when my girlfriend and I were living here in Temple Terrace, we used to frequent a pita shop near MOSI (the Museum of Science and Industry). Sometimes we went together and sometimes one of us would go alone to pick up lunch or dinner. Often, especially when she went alone, the clerk would flirt with her. No big deal; she was an attractive young woman. Then one day she received a Facebook friend request from him. That disconcerted her… and me. How did he get her name? We finally figured out that he must have gleaned it from her credit card and searched for her on Facebook. So… what was bothersome about this? Why did she feel violated by his contact? Why was it a bit “creepy”? The clerk had exceeded the social norms of the customer/merchant relationship. He contacted her outside the normal bounds of that relationship. This isn’t to say it can’t happen. I’m sure plenty of relationships have begun because people met where one of them worked. But usually, one person asks permission: the waitress leaves her phone number on the check, or the customer gives the cute store clerk his business card. Those actions all give the other person a choice, the option of declining to expand the relationship.

Privacy is much more than just keeping secrets. My girlfriend gave him her name. She wasn’t trying to conceal it. It was his use of it outside the merchant/customer relationship that violated her privacy. Privacy does not require that things be kept secret. Privacy requires respect for context, respect for decision making.

Consider other relationships:

You tell your friends different things than you tell your boss.

You tell your spouse different things than you tell your children.

You tell your doctor different things than you tell your waitress.

You tell your bartender everything!

If someone starts inquiring about things that aren’t normally appropriate for a relationship, we get queasy. We feel unnerved. Imagine your doctor asking about your finances, or your children asking about your sex life. Those things aren’t part of the relationship. We share our health issues with some friends and not with others, but if we don’t announce our health concerns on Facebook, we probably don’t want our friends to do so either. We never state that rule explicitly, yet when they break that unwritten social norm, the relationship is damaged.

A few years ago, a high school student received a mailer from Target advertising maternity clothes, baby carriages and other things a would-be mom might like to have. Her angry father stormed into the local Target to complain to the manager, who was profusely apologetic. A few days later, it was the father who was apologizing. It turned out the young woman was pregnant, and Target knew before her father did. The company had used predictive analysis of her purchasing patterns to identify her probable pregnancy and estimate her due date. Target knew not because she had disclosed it but because her purchases implied it.

Some people respond to that story not with concern about Target’s behavior but with the retort that children don’t have privacy from their parents. I respond that nothing could be further from the truth. As children grow up, privacy is essential to their growth as individuals. The ability to distinguish their own thoughts and actions from those of their parents is what develops their personhood. Children become adults as they become individuals, with their own thoughts and the ability to control their interactions with the world. They develop a sense of self, a sense of freedom from their parents’ all-knowing watch. And kids do take active steps to claim that privacy from their parents. In the past five years, as a wave of parents joined Facebook, kids began seeking alternative avenues of expression where they could be free and would not have to self-censor. They have moved to Tumblr, Twitter and other services not yet used by their parents or other adults in their lives. There was a fascinating article by a dad who, for a decade, monitored the online activities of his three daughters. He used key-loggers, screen-capture software and other technology to monitor them totally. He learned some incredible things, nothing bad, and the experience made him introspective. I quote: “The idea that even my virtual presence on Tumblr or Twitter might prevent them from being able to express themselves or interact with their friends (some of whom they have never met) in an authentic way made me feel like I was robbing them of one of the most powerful features of the social web.”

You often hear the retort that if you aren’t doing anything wrong, you have nothing to hide. The problem with the “nothing to hide” retort is that it presumes the only privacy violation is a lack of secrecy. The harms of surveillance go well beyond exposure. The problem is not Orwellian; it is Kafkaesque. In Franz Kafka’s The Trial, the protagonist is arrested by a secret court that relies on a secret dossier compiled in a secret investigation. He is not allowed to see it, or to know how it was compiled or what it contains.


The FTC is grappling with how to deal with mortgage companies that don’t advertise their services to everyone. These companies use complex algorithms to predict who won’t default on a loan, but unlike traditional mortgage lenders they aren’t bound by the Fair Credit Reporting Act, because they don’t “reject you”; they just never market to you. What if you never have the opportunity to get a mortgage because of where you live, what products you buy or who you associate with?

Orbitz was found to pitch higher-priced hotels to Mac users than to PC users.

People on the no-fly list are not told why they were put there and appeals are limited to those who are mistakenly identified because they share a name with a suspected individual.

Who is in control of your life?

Let’s talk about control for a bit. Companies are increasingly putting out devices that they control, not you. This is done at the behest of either government or industry. It started with copyright, with VHS tapes and now DVDs carrying technical mechanisms to stop people from copying them. Sony, a few years ago, put a virus on millions of its CDs: when a CD was placed in a computer, it prevented the computer from copying the CD but also exposed the computer to other viruses. Copy machines are restricted from copying US currency. Years ago, my phone provider forced me to upgrade my phone because mine didn’t have GPS. The FBI was behind the initiative, called Enhanced 911, as a public safety measure so that when you called 911 they could identify your location. Yes, the FBI, the public safety organization. There is talk about preventing 3D printers from printing gun parts, but what about Mickey Mouse dolls or interchangeable anatomically correct Barbie parts? Apple doesn’t allow applications that show pornography in its App Store… or ones that identify drone strikes in Pakistan. Google’s Android market won’t allow ad-blocking software, which stops other apps from displaying advertising and running up your bandwidth bill.

In 2011, San Francisco’s BART shut down cell phone service in its subway system to block a planned protest.

I want to switch gears a bit and talk about risk. Risk is important when making decisions about privacy. Generally there are trade-offs: we share information because there is a benefit. We tell our doctors about our health issues to get the benefit of their professional advice. On a societal scale, we allow search warrants to fight criminal activity. There is always a balancing of privacy against other interests, be they personal or societal. However, we are terrible at assessing risk. Risk combines the probability that something will happen with the severity of the damage if it does.

We have cognitive (mental) biases that make us prefer getting things now and discount costs in the future. We behave irrationally. In one study a few years ago, shoppers in a mall were given $10 gift cards and offered a $12 card in exchange for some personal information; about 50% kept the $10 card. Other participants were given $12 gift cards and offered the option of switching to an anonymous $10 card; only 10% switched. The economic choice was essentially the same, but depending on where people started, privacy was worth more to those who already had it than to those who would have to pay to acquire it.

On a societal level, privacy suffers from not being visceral enough, not vivid enough, not violent enough. Fear is a powerful motivator, and we tend to fear things that are extremely low probability: terrorist attacks, child abductions, random shooters. But these things are incredibly rare, and that rarity is exactly why they get amplified out of proportion. You have a higher chance of drowning than of dying in a terrorist incident. Children have a higher chance of drowning than of being abducted by a stranger. Yet we spend incredible resources trying to defend against such low-probability events. What else do we lose in the process? What kind of society do you want?

Until a few hundred years ago, the notion of privacy was very limited, but so too was the notion of freedom. We lived in societies ruled by oligarchies, feudal lords and dictators. It is no coincidence that as we gained privacy, we also gained freedom. As we grew into a society of individuals with power over our own lives and bodies, we no longer served the state as serfs. Privacy is not about secrecy. Privacy is freedom.

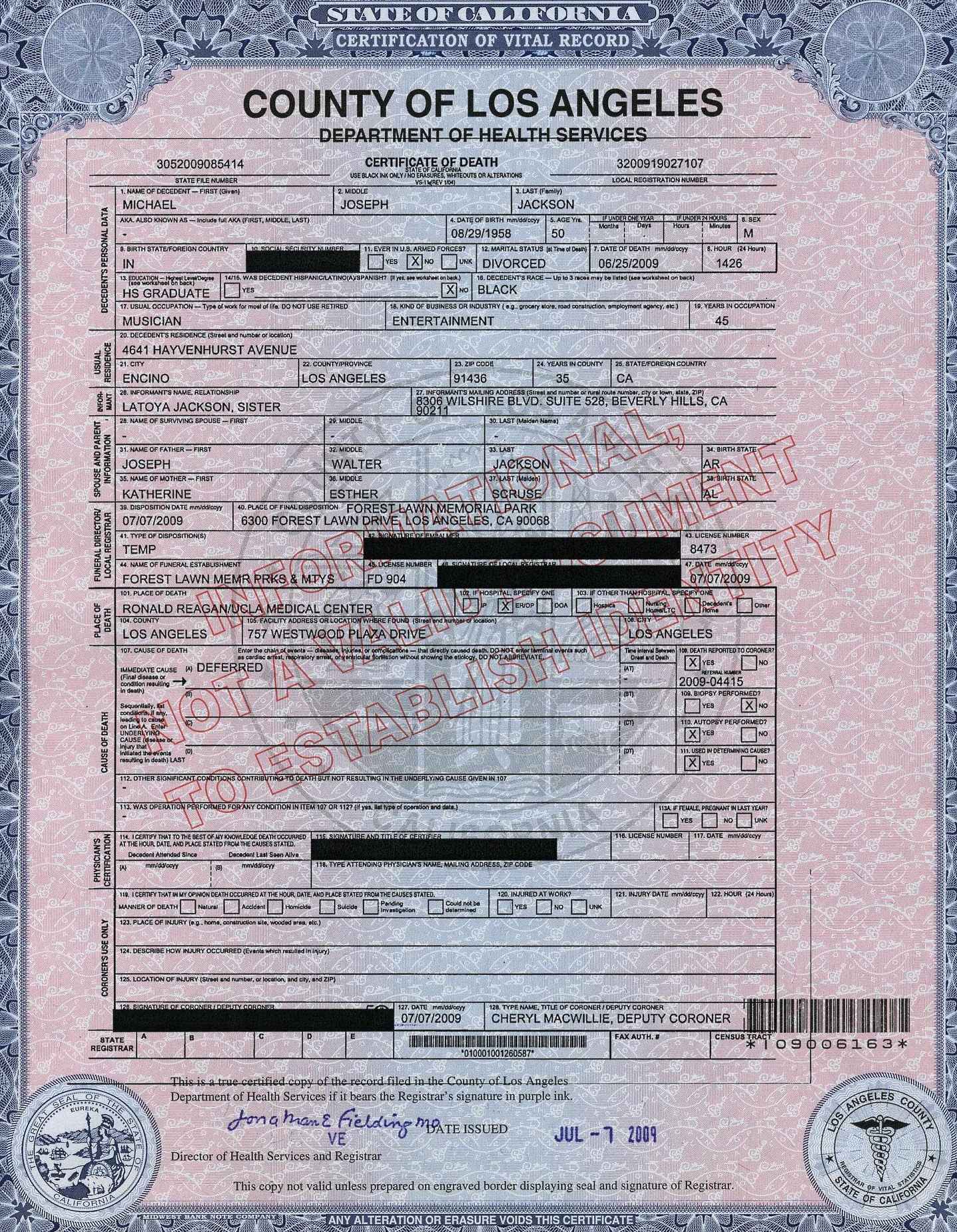

Fast forward to 2013. Unfortunately, I’ve had a few friends pass away recently. One friend’s death was particularly gruesome and was publicized in the local press. The other deaths were more mundane, and to this day I don’t know what those friends died of. One of them was elderly, and I suspect health issues, though I’m unsure. The other friend was my age. In Florida, a death certificate is public record and easily obtained by paying the appropriate fee. However, only certain relatives or those with an interest in the estate of the deceased may receive a death certificate which lists the cause of
