Financial privacy is most often conceptualized in terms of the confidentiality of one’s finances or financial transactions. That’s the “secrecy paradigm,” whereby hiding money, accounts, income and expenses prevents exposure of one’s activities, which could subject one to scrutiny or reveal tangential information one wants to keep private. Such secrecy can be paramount to security as well. Knowing where money is held, where it comes from or where it goes gives thieves and robbers the ability to steal that money or resource. Even knowing who is rich and who is poor helps thieves select targets.

Closely paralleling “financial privacy as confidentiality” is identity theft: using someone else’s financial reputation for one’s own benefit. In the US, the Gramm-Leach-Bliley Act’s Safeguards Rule provides some prospective protection against identity theft, while the Fair Credit Reporting Act (FCRA), the Fair and Accurate Credit Transactions Act (FACTA) and the FTC’s Red Flags Rule provide additional remedial relief.

However, as I’m fond of saying in my Privacy by Design Training Workshops, “that’s not the privacy issue I want to talk about now.” All of the preceding examples are issues of information privacy. Privacy, though, is a much broader concept than that of information. In his Taxonomy of Privacy, Professor Daniel Solove categorized privacy issues into four groups: information collection, information processing, information dissemination and invasions. It’s that last category to which I turn the reader’s attention. Two specific issues fall under the category of “invasions,” namely intrusion and decisional interference. Intrusion is something commonly experienced by all: a telemarketer calls, a pop-up ad shows up in your browser window, you receive spam in your inbox or a Pokémon Go player shows up at your house. It is the disturbance of one’s tranquility or solitude. Decisional interference may be a more obscure notion for most readers, except for those familiar with US constitutional law. In a series of cases, starting with Griswold v. Connecticut and more recently and famously in Lawrence v. Texas, the Supreme Court rejected government “intrusion” in the decisions individuals make about their private affairs, with Griswold concerning contraceptives and family planning and Lawrence concerning homosexual relationships. In my workshop, I often discuss China’s one-child policy as an example of such intrusion.

The concept of decisional interference has historical roots in US privacy law. Alan Westin’s definition of information privacy (“the claim of individuals, groups, or institutions to determine for themselves when, how, and to what extent information about them is communicated to others”) includes a decisional component. Warren and Brandeis’ right “to be let alone” also embodies this notion of leaving one undisturbed, free to make decisions as an autonomous individual, without undue influence by government actors. With this broader view, decisional interference need not be restricted to family planning, but could be viewed as any interference with personal choices, say, government restricting your ability to consume oversized soft drinks. I guess that’s why my professional privacy career and my political persuasion of libertarianism are so closely tied.

But is decisional interference solely the purview of government actors, then? Up until recently, I struggled to come up with a commercial example of decisional interference, owing to my fixation on private family matters. A recent spark changed that. History is replete with financial intermediaries using their position to prevent activities they dislike, and since many modern individual decisions involve spending money, the ability of an intermediary to disrupt a financial transaction is a form of decisional interference. A quick look at PayPal’s Acceptable Use Policy provides numerous examples of prohibited transactions that are not necessarily illegal (illegal transactions are covered by the first line of their AUP). Credit card companies have played moral police, sometimes at the behest of government but more often out of excess caution, going beyond legal requirements. Even a financial intermediary’s prohibition on illegal activities is potentially problematic, as commercial entities are not criminal law experts and will choose risk-averse prohibition more often than not, leading to chilling of completely legal, but financially dependent, activity.

This brings me to the subject of cryptocurrencies. Much of the allure of a decentralized money system like Bitcoin is not in financial privacy vis-a-vis confidentiality (though Zerocoin provides that) but in the privacy of being able to conduct transactions without interference by government or, importantly, a commercial financial intermediary. What I’m saying is not an epiphany. There is a reason that Bitcoin gave rise to online dark markets for drugs and other prohibited items. It was not because of the confidentiality of such transactions (in fact, the lack of confidentiality played into the take-down of the Silk Road) but because no entity could interfere with the autonomous decision making of the individual to engage in those transactions.

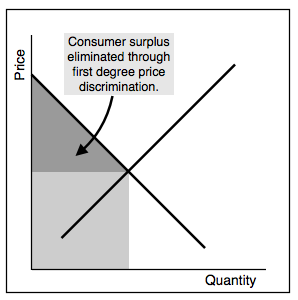

Regardless of your position on dark markets, you should realize that in a cashless world, the ability to prevent, deter or even discourage financial transactions is the ability to control a society. The infamous pizza privacy video is disturbing not just because of the information privacy invasions but because it attacks the individual’s autonomy in deciding what food they will consume, charging them different prices based on an external intermediary’s social control (here, a national health care provider). This is why a cashless society is so scary and why cryptocurrencies are so promising to so many. They return the financial privacy of electronic transactions, vis-a-vis decisional autonomy, to the individual.

[Disclosure: I have an interest in a fintech startup developing anonymous, auditable, accountable tokens that provide the types of financial privacy identified in this post.]